Automatically Pre-pick Tables for AI When Working With Large Database Schemas

Artificial intelligence has a limited context window as an upper limit for how large prompts it can technically handle. For OpenAI's GPT-4o model, this is 128,000 tokens. Including large database schemas in AI prompts poses two problems:

-

including the entire database schema, if it is large enough, lowers accuracy because it includes large amounts of data that are irrelevant to the task at hand

-

including the entire database schema is expensive

To solve this, we can do an automatic pre-pick of relevant database tables. The procedure is therefore as follows:

-

the user writes his instruction as normal

-

select relevant database tables to fulfill the given instruction

-

run the final instruction with selected tables and the user's instruction

The automatic selection of database tables happens when the added database schema uses over 500 tokens (measured with a dummy tokenizer to save resources). The pre-pick threshold is set low partly because it saves tokens and thus gives users more generations while improving generations since the prompt contains less irrelevant data and is more precise.

A concrete example

A large database schema can be added in 2 ways:

-

add a connection to your database and the database schema is automatically fetched

-

import the database schema with an SQL query

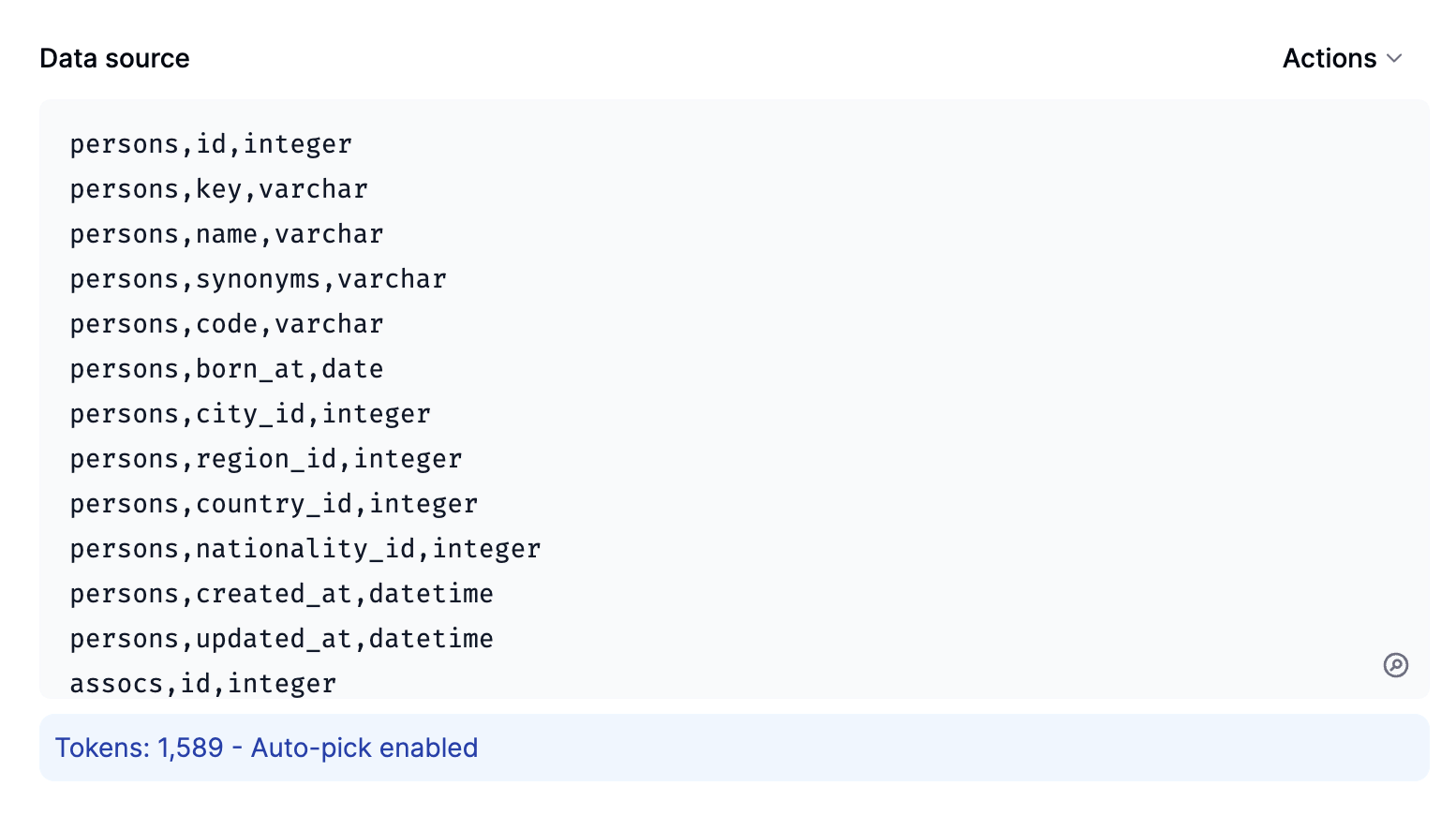

Once the database schema is added, the token usage and whether pre-picking is active can be viewed:

The above database schema is football.sql which is imported with AI importer.

Now AI can be instructed in the normal way and relevant tables will automatically be included. The various queries from views.sql can easily be recreated by simply instructing the AI (e.g. "list World Cup Brazil 2014 teams"). For this instruction AI will include the following tables:

teams, events_teams, events, games, goals, groups_teams, rosters, group_standings, group_standing_entries, events_ground

And generate the following query:

SELECT

t.title

FROM

events e

JOIN events_teams et ON e.id = et.event_id

JOIN teams t ON et.team_id = t.id

WHERE

e.key = 'world_cup_brazil_2014';

The token consumption for pre-picking tables is around 200 tokens and for the subsequent generation 1000 tokens. For such small database schemas, the savings are limited. For larger database schemas, the savings are more significant. For a database schema with 334 tables and a total token usage of 45,000 tokens (costing around $0.225), one generation costs:

- pre-picking tables 1800 tokens

- SQL query generation 3800 tokens

That's a total token savings of around 40,000 tokens per generation. Running the pre-picking generations only cost $0.03. It has been tested with a database schema containing around 600 tables and it worked. Automatic pre-picking not only makes it economically feasible to use AI on large database schemas but also technically because the prompt remains small and relevant for the desired purpose. And of course the user-friendliness is much better than having to manually pick database tables for each generation.